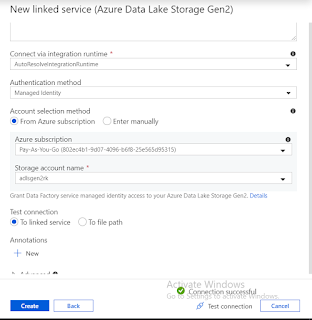

Recently I have have been copying data from Azure Data Factory Copy activity to Oracle (on-premise) using Self-Hosted IR. After few days starting getting below error.

{

"errorCode": "BadRequest",

"message": "Operation on target CD_SQL_ORA failed: Failure happened on 'Sink' side. ErrorCode=UserErrorOdbcOperationFailed,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=ERROR [HY000] [Microsoft][ODBC Oracle Wire Protocol driver]Error tolerance exceeded. Bulk load operation terminated.

Error in parameter 4.\r\nERROR [HY000] [Microsoft][ODBC Oracle Wire Protocol driver][Oracle]Invalid Position. Error in row 10001. Error in parameter 4.,Source=Microsoft.DataTransfer.ClientLibrary.Odbc.OdbcConnector,''Type=Microsoft.DataTransfer.ClientLibrary.Odbc.Exceptions.OdbcException,Message=ERROR [HY000] [Microsoft][ODBC Oracle Wire Protocol driver]Error tolerance exceeded. Bulk load operation terminated. Error in parameter 4.\r\nERROR [HY000] [Microsoft][ODBC Oracle Wire Protocol driver][Oracle]Invalid Position. Error in row 10001. Error in parameter 4.,Source=msora28.dll,'", "failureType": "UserError", "target": "PL_COPY_CUST", "details": "" }After googling about the error and even contacting Oracle DBA there was not solution found. Contacted Microsoft support as last option. After few trail and error it worked out after changing 'writeBatchSize' from 10,000 (default value) to 50,000.

Again not sure of the reason and there is not much information around it in the documentation. Please let me know if anyone know exact cause of the issue.

Happy Coding!